Two hosting methods

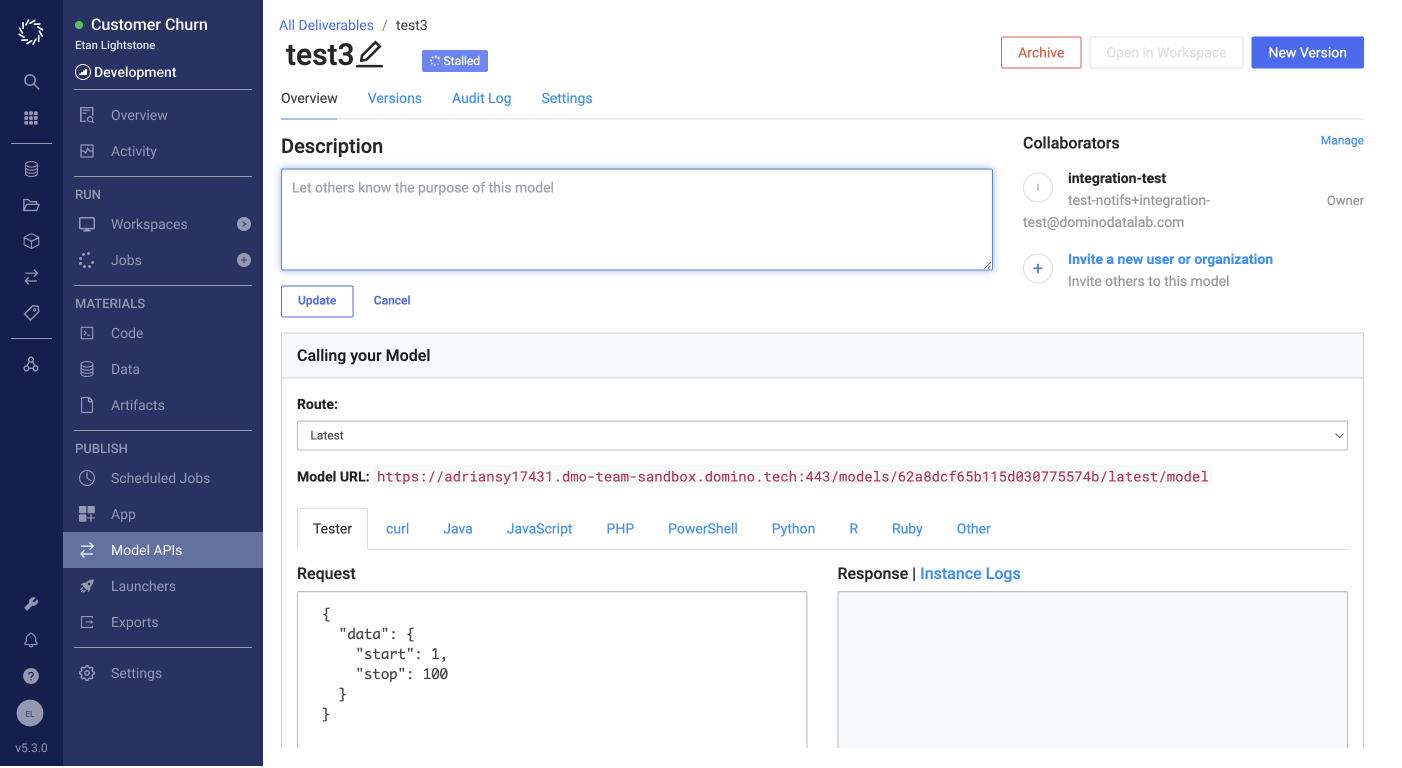

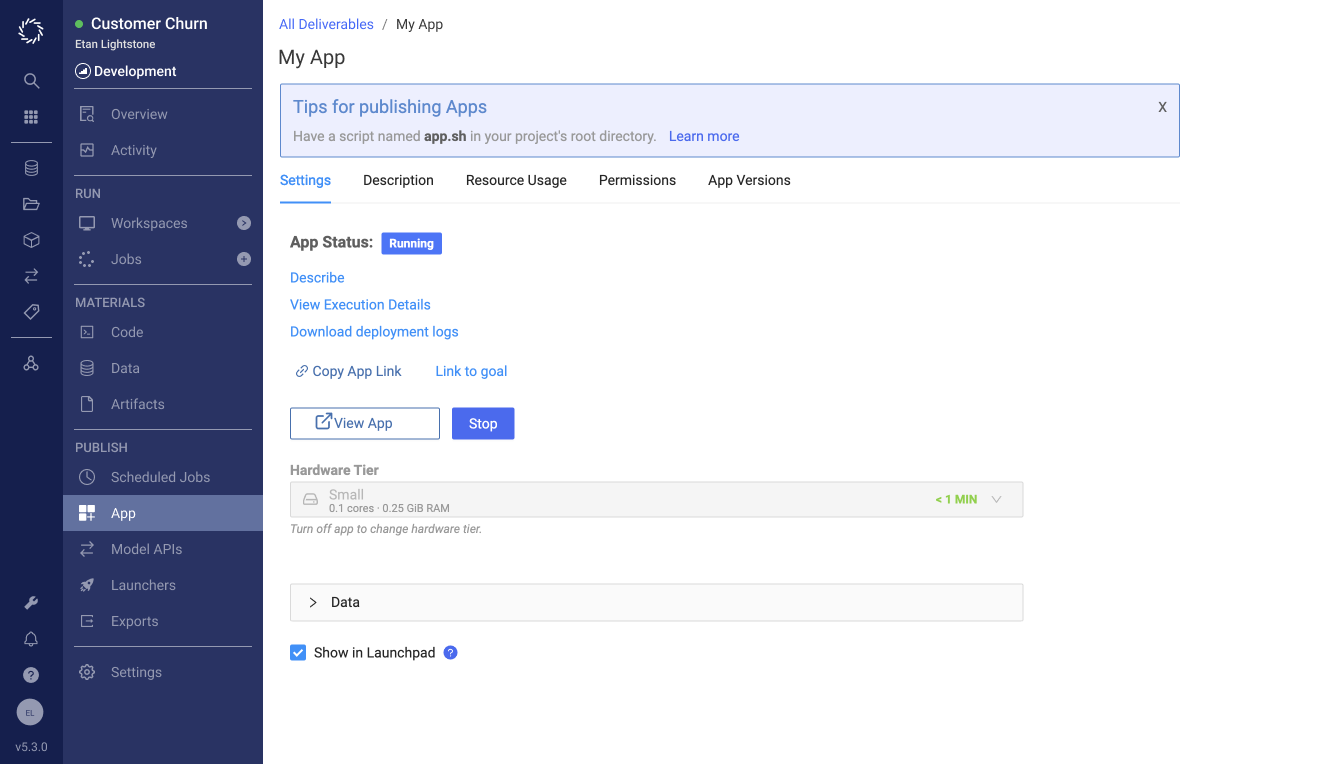

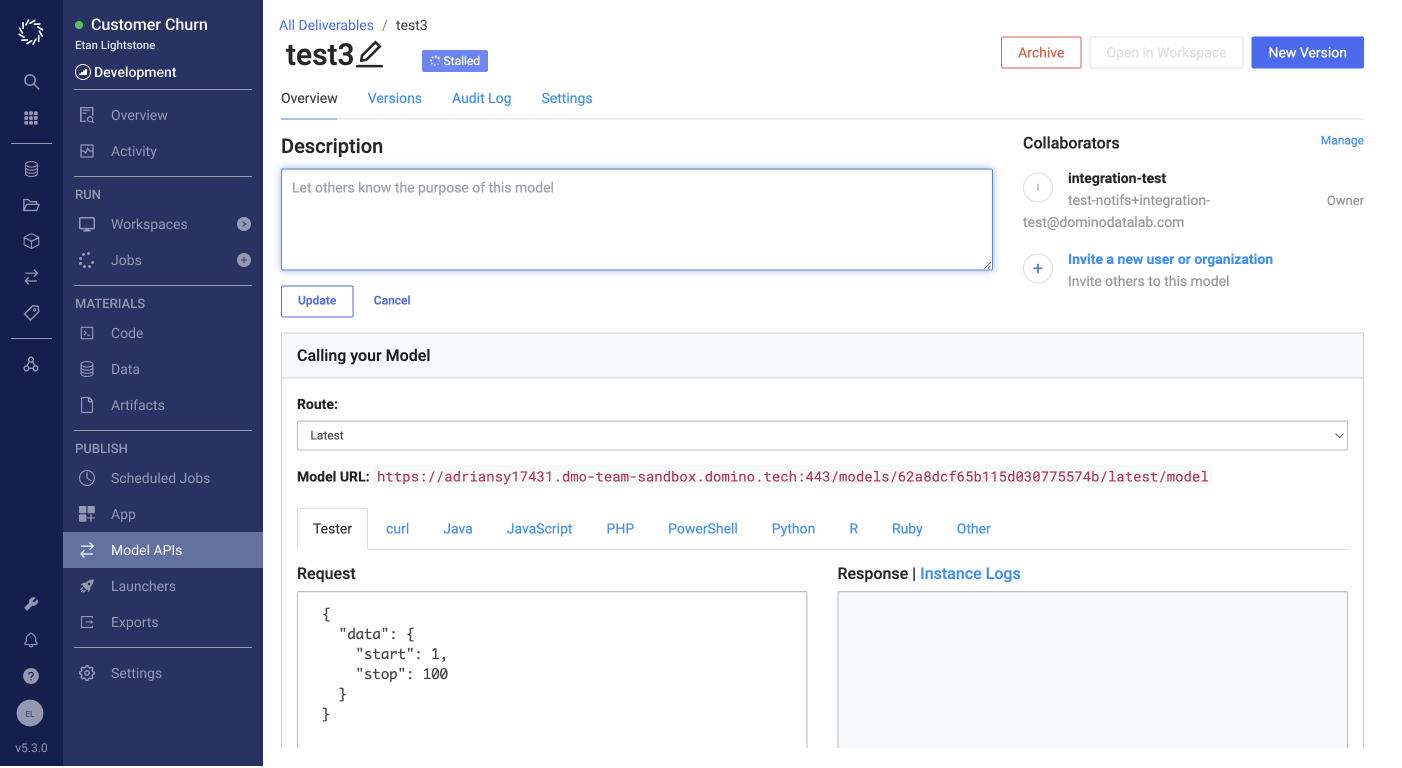


Allow the product to scale to future features and personas

Led research and design. Built buy-in within the team. Proposed the vision to leadership. Led the initial project to create an MVP.

The goal of Model Ops is to enable a data science team to deliver its work to stakeholders. As we tried to mature our offering, we encountered issues that pointed to the need for a more robust vision for the future of the product.

Issues:

"Model APIs" and "Apps" are two ways of hosting and delivering models. The main difference is that Apps can be viewed in a web browser. However, in our product, the two had completely different UIs and configuration.

I wanted to take a step back, understand user goals and workflows more deeply, and create a vision that would let us scale the product to new technologies, use cases, and personas while increasing the value we provide to customers and decreasing their time to value.

I started by interviewing data scientists to learn how they approached delivering their work. I wanted to better understand their goals and workflows and, most importantly, how they interacted with stakeholders inside and outside their team to deliver that work to the rest of their organizations.

In terms of customer limitations, I identified two major problems with our existing approach:

For a data science team to provide value to the rest of its organization, it must deliver predictive models to other stakeholders in a form those stakeholders can consume. Our product was oriented around our implementation of each delivery method rather than around who needed to consume a model and why.

This was causing a number of issues:

The connection between doing data science work and delivering it was implicit. This was causing a number of problems:

As I thought this through, I saw that we had collapsed two user workflows into one. The combined UI was oriented around our hosting implementations rather than around the underlying user goal: delivering a model to a specific model consumer.

My next step was to map out the two user workflows so that I could separate them.

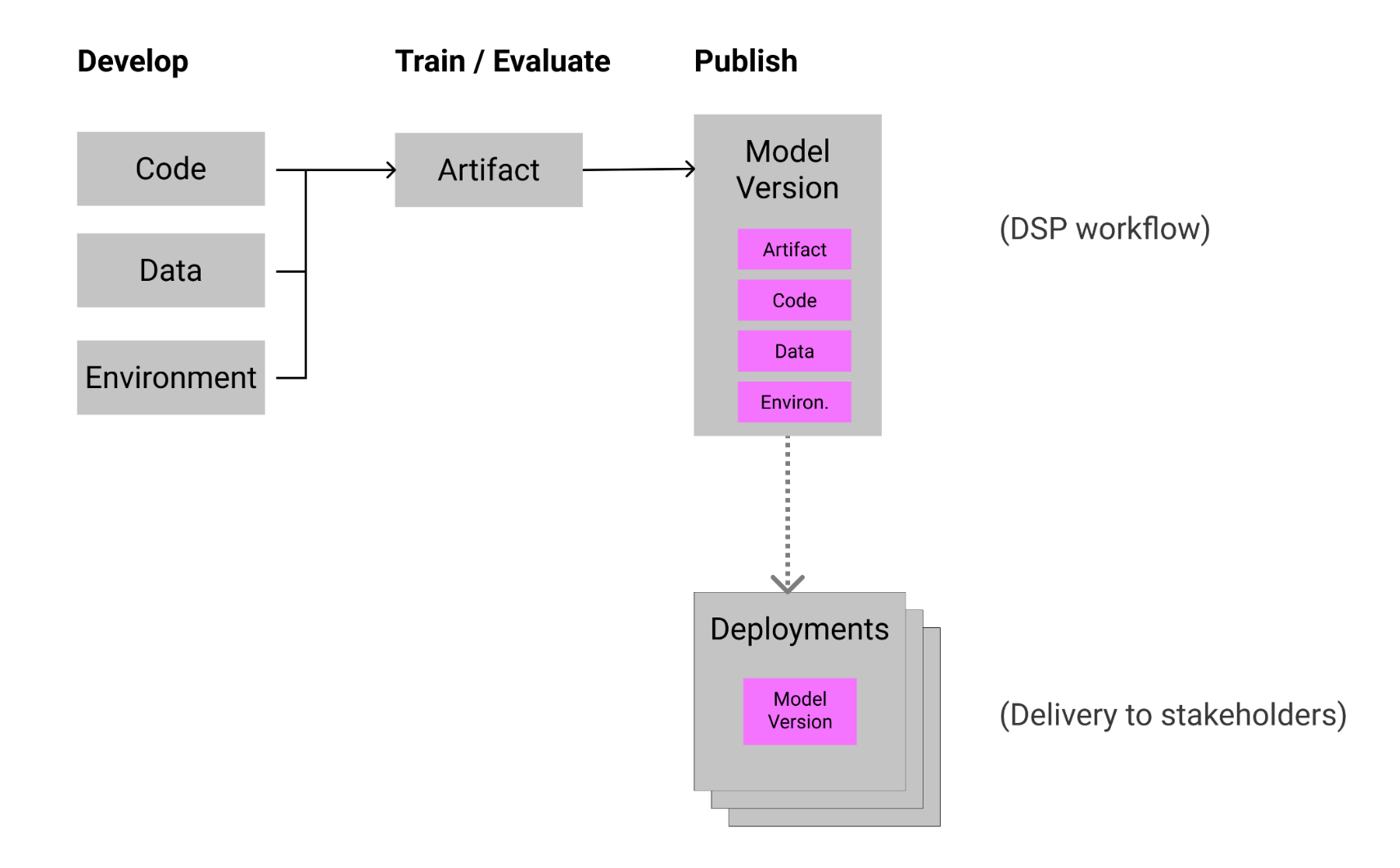

Data scientists develop models through an iterative process. The process ends with a version of the model that makes accurate predictions. That version is deployed, and over time it runs into limitations that require it to be iterated on and deployed again.

A given stakeholder consumes a given model in a relatively fixed way. A team that needs predictions will use the same resource over time, without needing to know about the iteration happening inside the data science team.

For example:

In all of these examples, the model consumer needs continuity. As long as the model works and can be found in the same place, the updates and changes happening on the data science team's side are irrelevant to them.

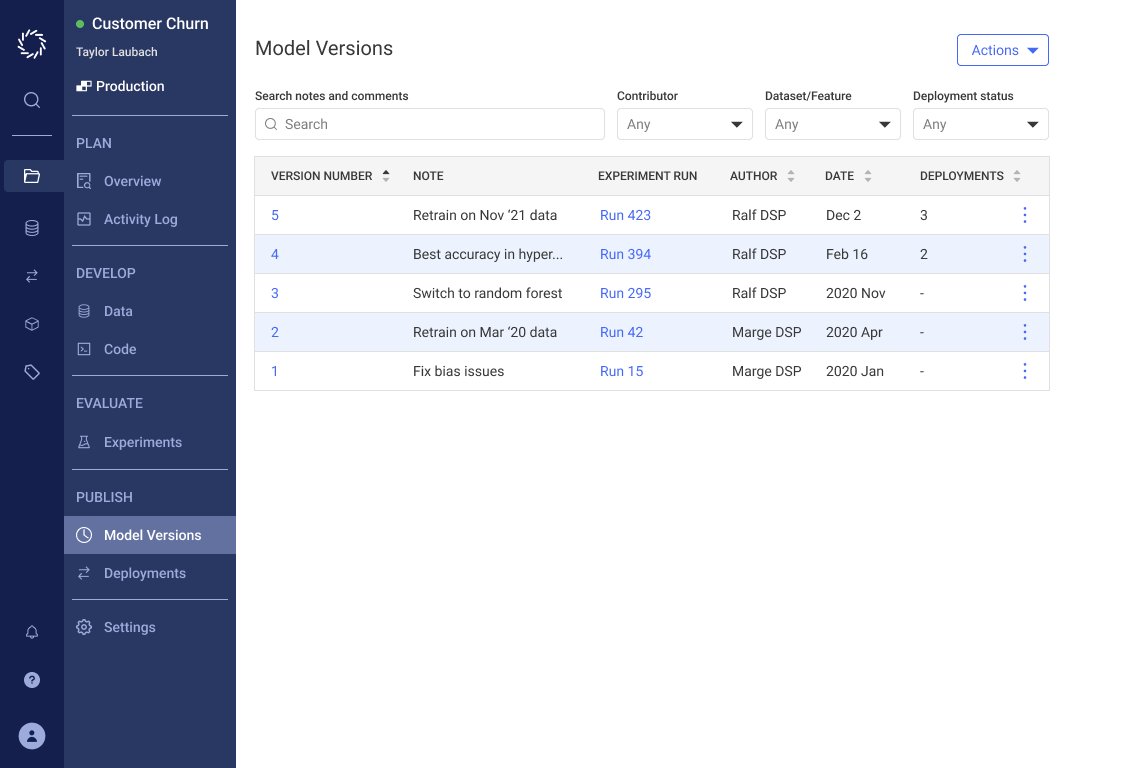

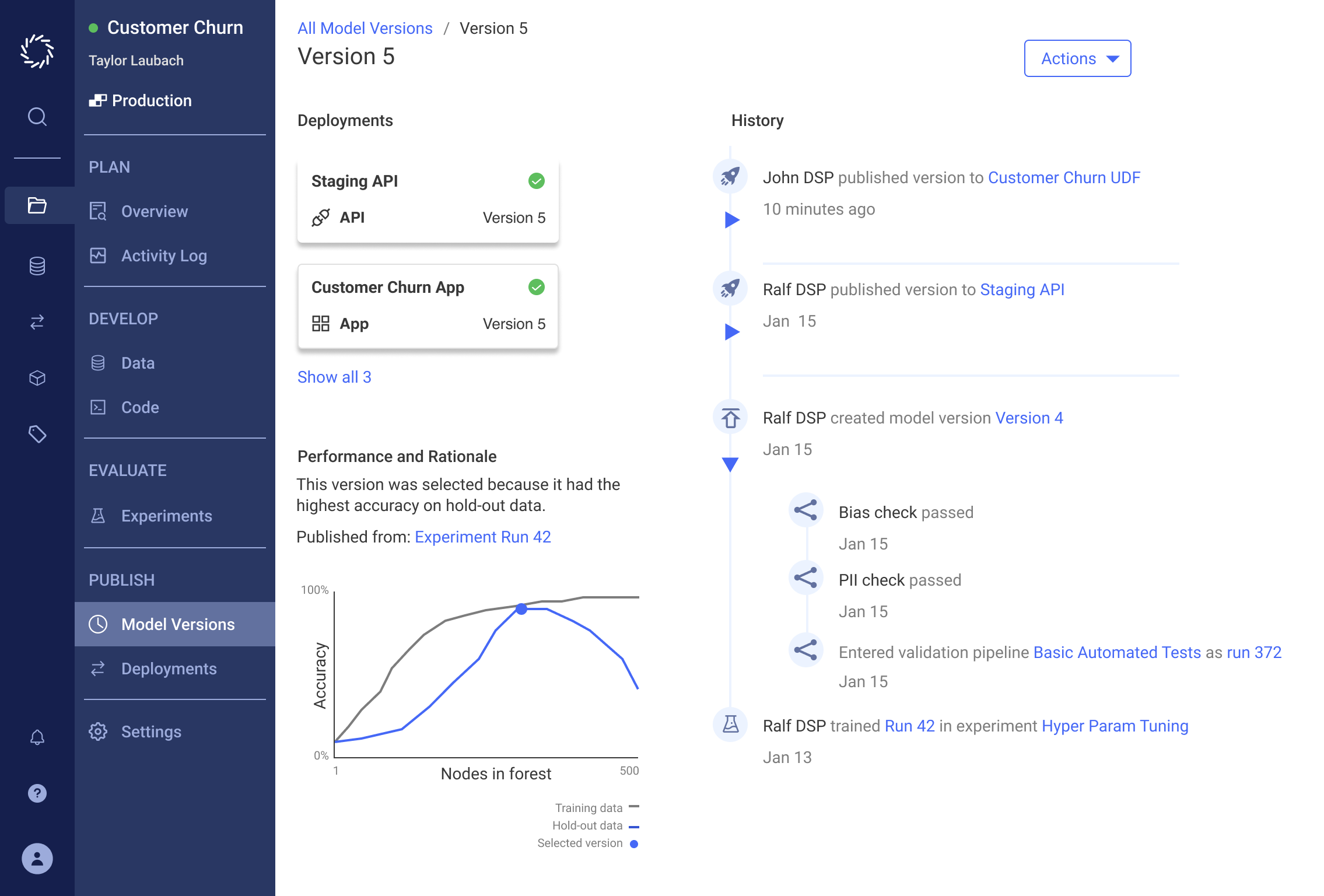

I created a design that solved these issues by 1. separating Versions into their own entity and 2. combining the various hosting methods as configuration within a single entity. I went through 5 rounds of user testing and iteration. After each round, I shared my findings with the PM and Engineering leads, and we collaborated to refine the solution.

By the end, we had a design that strongly supported the user goals and tasks we had identified. Engineers were excited because it outlined clean solutions to the technical limitations we had encountered. PMs were excited because it offered a clear, low-effort path to scaling to future features and to new personas we hadn't supported before.

I worked with the PM and Eng leads to plan an incremental approach to implementing the vision. To make the case for prioritizing this work, they tied it to existing initiatives on both the Eng and Product sides. On the Product side, we had partnerships that would require us to scale to new deployment types; on the Engineering side, we had a tech debt initiative that would require rewriting some UIs and their underlying APIs.

My solution was organized around the two workflows outlined above, creating one entity (and a corresponding section of the IA) for each workflow.

One portion of the UI was focused on managing the connection from model development to deployment.

It introduced an explicit model version object that could be moved between deployments and reopened in other parts of the product for analysis and further iteration.

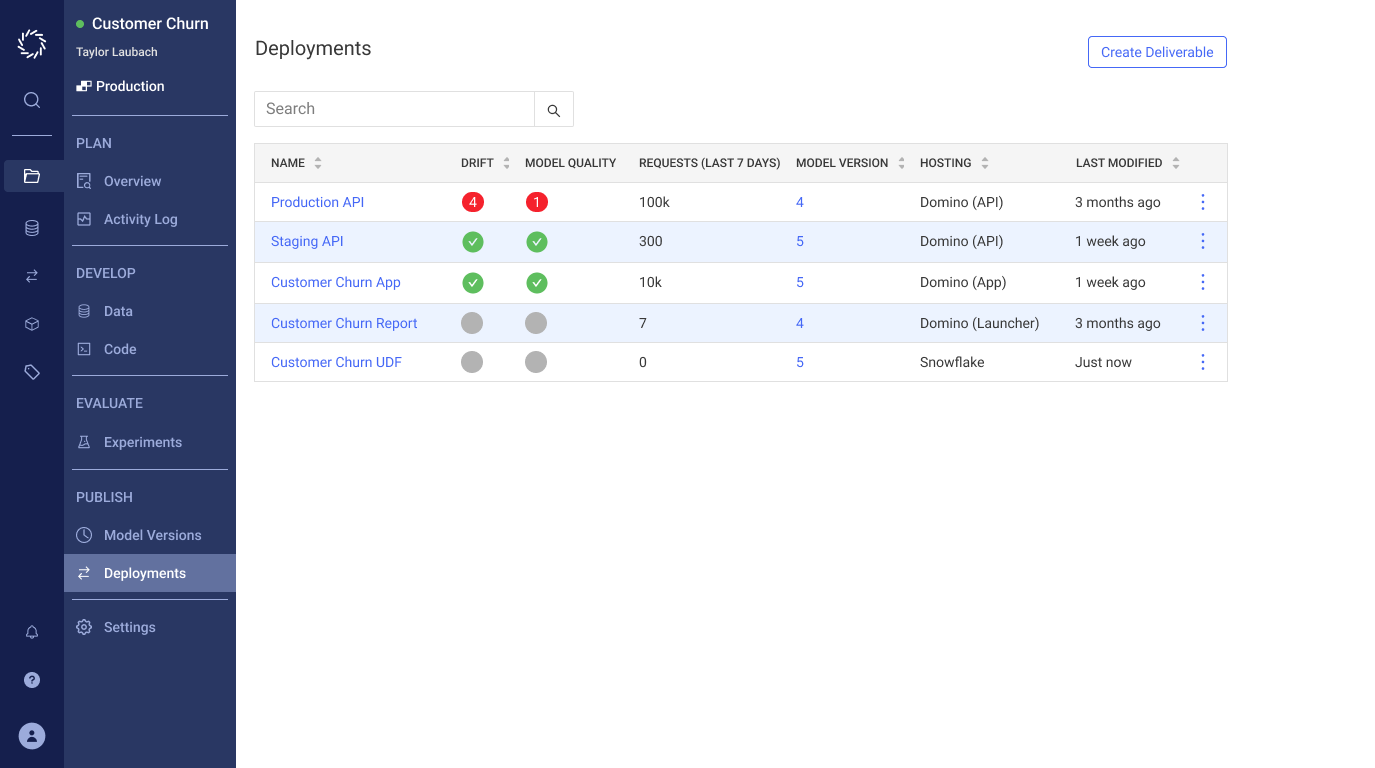

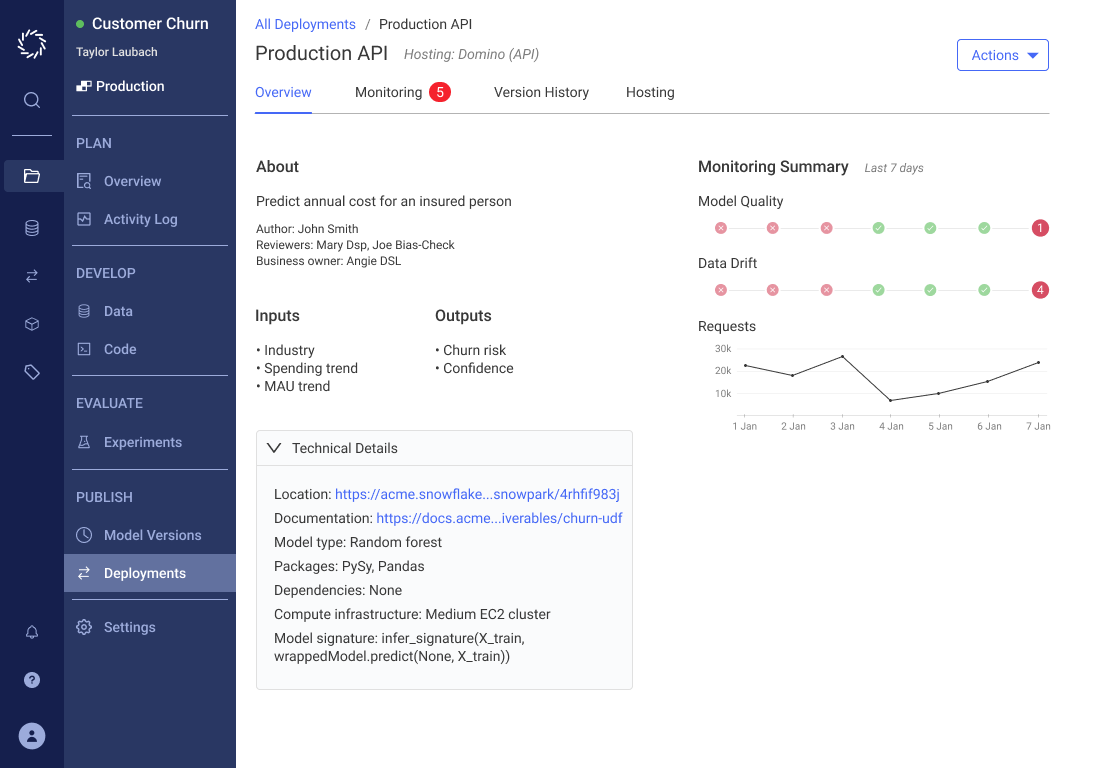

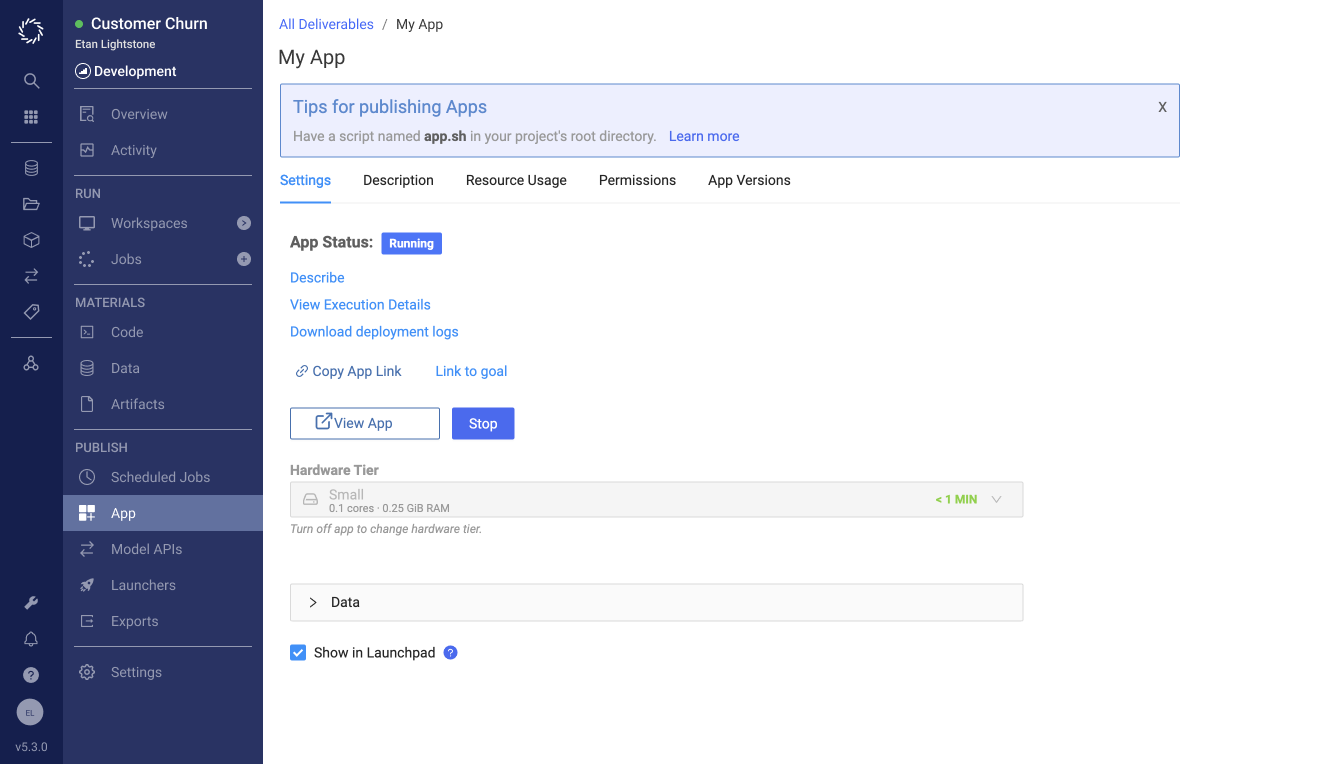

The other portion of the UI was focused on how models would get into the hands of model consumers.

Each deployment is a durable representation of a model being delivered at a specific location for a specific use case. Clear indicators show whether it is being delivered successfully. A settings section contains the hosting-specific configuration, bringing consistency across hosting types. This reduced complexity for users and allowed the UI to scale to any future hosting technology.